How AI controls my computer and finds $10M leads for a builder in LA

From nepotism to innovation

My dad is a general contractor in LA. He just finished a $20M building and wanted more projects.

We set up an AI that controls my computer and researches leads for him. One week later, his company had more projects to bid on than it could handle, each bid in the $10–$50M range.

How we got there was actually pretty simple.

Fix the sales environment

Create a new source of leads

Task AI to find the permits and extract lead info (to qualify the project and get contact info)

Once the basics were in place, the AI was easy. Here’s the breakdown.

1. Fixing the sales environment

Their “CRM” was a local Excel file bounced back and forth over email. ~2,000 leads with chaotic fields: duplicate columns, statuses spelled three different ways, notes in address fields, addresses in status fields.

Filtering was basically impossible.

What I changed:

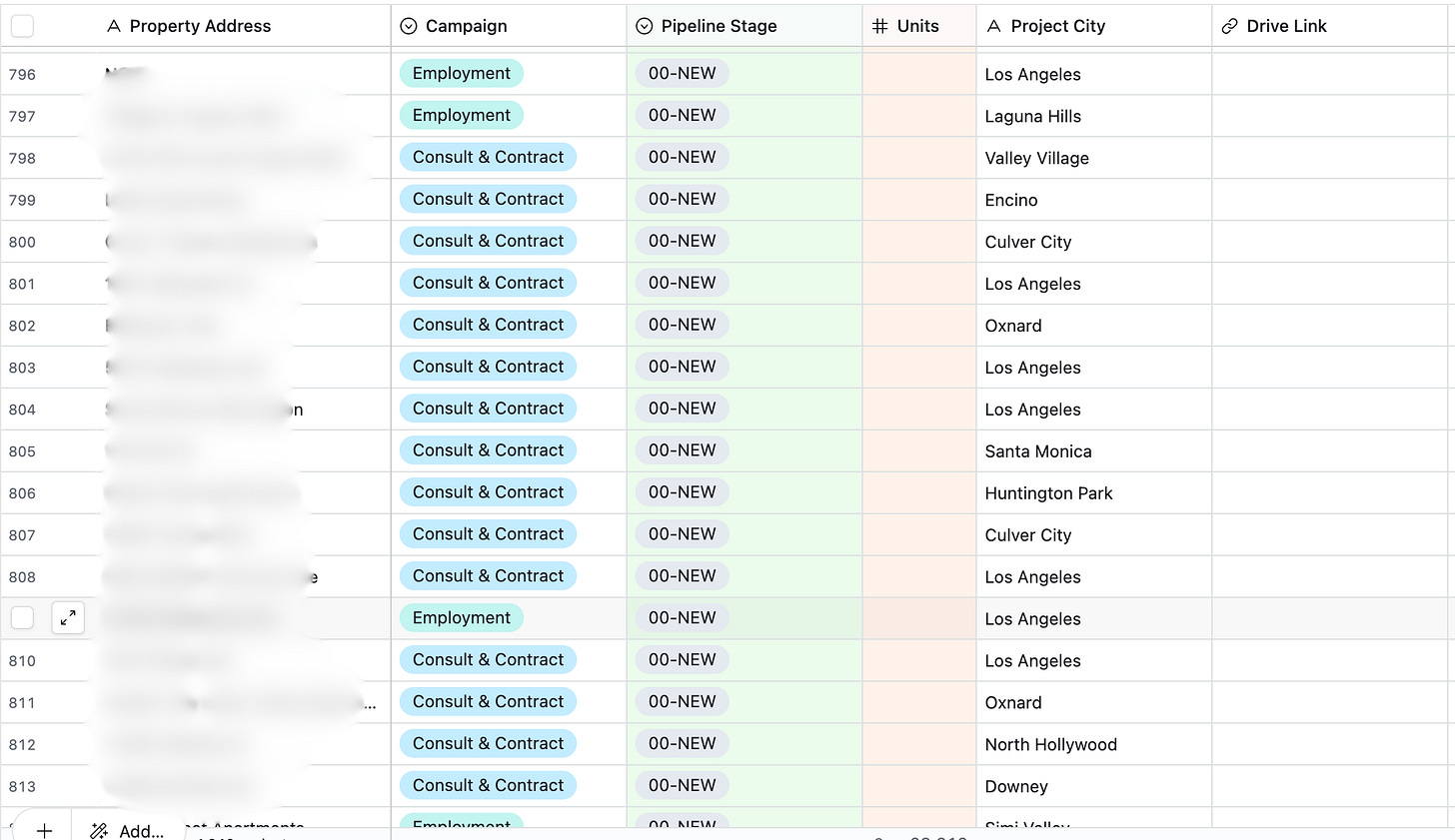

Moved to Airtable. A spreadsheet wasn’t enough: I needed a database and the ability to create views for different phases of the process.

Data repair with scripts. A couple of Python scripts did the heavy lifting:

Consolidated duplicate fields and picked the best-value data when conflicts existed.

Normalized statuses (e.g., LEAD / Lead / lead → Lead).

Ran an AI prompt that took each record’s JSON, made sure the fields had the right info, and returned an updated JSON with corrections.

Interfaces for each stage. Broke the sales process into stages (New Lead, Qualified, Called, Bidding), then built views or interfaces for each stage so each process was siloed and nobody was messing up anyone else’s data.

Call queue for one person. To make this dummy-proof for my 70-year-old father (bless his heart), I made him an interface that showed ONLY the next lead he needed to call, with ONLY the information he needed to make that call.
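The data-repair steps are the part most worth reusing elsewhere. Here is a minimal sketch of them in Python, not the original scripts: it assumes rows exported from Excel as dicts, duplicate columns that differ only by case, whitespace, or a suffix like `(2)` or `.1`, and a hypothetical "longest non-empty value wins" rule for conflicts. The AI correction step is reduced to a guardrail that rejects model output that adds or drops fields.

```python
from __future__ import annotations

import json
import re
from collections import defaultdict

# Hypothetical canonical list: the post's stages (New Lead, Qualified, Called,
# Bidding) plus the plain "Lead" from the status example.
CANONICAL_STATUSES = ["Lead", "New Lead", "Qualified", "Called", "Bidding"]

# Matches duplicate-column suffixes such as "Status (2)" or pandas-style "status.1".
_DUP_SUFFIX = re.compile(r"\s*(\(\d+\)|\.\d+)$")


def base_name(column: str) -> str:
    """Collapse duplicate column names to one lowercase key."""
    return _DUP_SUFFIX.sub("", column.strip()).strip().lower()


def pick_best(values: list[str]) -> str:
    """Assumed 'best value' rule: the longest non-empty value wins."""
    filled = [v.strip() for v in values if v and v.strip()]
    return max(filled, key=len) if filled else ""


def consolidate(record: dict[str, str]) -> dict[str, str]:
    """Merge duplicate columns in one row, resolving conflicts with pick_best."""
    groups: dict[str, list[str]] = defaultdict(list)
    for column, value in record.items():
        groups[base_name(column)].append(value)
    return {name: pick_best(values) for name, values in groups.items()}


def normalize_status(raw: str) -> str | None:
    """Case- and whitespace-insensitive match; None means 'send to manual review'."""
    key = " ".join(raw.split()).lower()
    for status in CANONICAL_STATUSES:
        if status.lower() == key:
            return status
    return None


def accept_ai_correction(original: dict, corrected_json: str) -> dict:
    """Guardrail for the AI cleanup pass: reject output that adds or drops fields."""
    corrected = json.loads(corrected_json)
    if not isinstance(corrected, dict) or set(corrected) != set(original):
        raise ValueError("model output changed the record's field set")
    return corrected


row = {"Status": "LEAD", "status (2)": "", "Address": "123 Main St", "Address.1": "123 Main St, Los Angeles"}
clean = consolidate(row)
clean["status"] = normalize_status(clean["status"])
print(clean)  # {'status': 'Lead', 'address': '123 Main St, Los Angeles'}
```

Returning `None` for an unrecognized status, instead of guessing, keeps odd values visible for a human to fix.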

Switching from “stare at a sheet of 1,000 rows and figure out what to do” to “press call on the next record” took them from a call every few days to 2–3 calls per day. That’s a ~6x action rate improvement before we touched lead volume.
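In Airtable, the call queue is just a filtered, sorted view behind an interface, but the rule it encodes is small enough to write out. A minimal sketch, assuming a hypothetical `Lead` record, a `Qualified` status meaning "ready to call", and oldest-first ordering (all three are assumptions, not the actual setup):

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Lead:
    """Hypothetical lead record; the real Airtable base had many more fields."""
    name: str
    phone: str
    project: str
    status: str
    added: date


# Assumption: these are the only fields the caller needs on screen.
CALL_FIELDS = ("name", "phone", "project")


def next_call(leads: list[Lead]) -> dict[str, str] | None:
    """Return the single next lead to call (oldest Qualified first), trimmed to CALL_FIELDS."""
    ready = [lead for lead in leads if lead.status == "Qualified"]
    if not ready:
        return None
    lead = min(ready, key=lambda item: item.added)
    return {field: getattr(lead, field) for field in CALL_FIELDS}


# Made-up sample records for illustration.
leads = [
    Lead("Acme Dev", "555-0101", "6-story mixed use", "Called", date(2024, 1, 5)),
    Lead("Pico Partners", "555-0102", "40-unit apartments", "Qualified", date(2024, 2, 1)),
    Lead("Harbor Holdings", "555-0103", "Office retrofit", "Qualified", date(2024, 1, 20)),
]
print(next_call(leads))
# {'name': 'Harbor Holdings', 'phone': '555-0103', 'project': 'Office retrofit'}
```

Returning one record with three fields is the whole point: the person making calls never has to look at the other rows.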

This might feel incredibly basic (because, of course, it is!), but it matters: later we’re going to use AI on a lot of data. If your data isn’t organized and structured (my dad’s wasn’t), it’s incredibly difficult to do any kind of automation, let alone AI automation.

2. A better lead source

Builders rely on directory-style “job boards.” They’re expensive, inconsistent, and crowded. Basically all the builders in your area are also getting those leads.

So we needed our own lead flow.

We looked for Ready-To-Issue (RTI) properties on LoopNet: projects where a developer has land and approved plans and is now selling them as a package.

Whoever buys an RTI property will build soon, so we want that lead as soon as it’s available.

The flow:

LoopNet filter → email alerts.

AI reads each alert email from LoopNet, extracts the unstructured description, fills a JSON “lead card,” checks for duplicates, and creates the lead in Airtable.

A small check runs later to see whether an RTI listing has disappeared (likely sold). If it has, its status changes in Airtable and it’s ready for the next step.
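The extraction step only works if the model’s output is checked before it touches Airtable. A minimal sketch of the glue around the model, with the LLM call and the Airtable write left out: it assumes the model returns a JSON object with `listing_url` and `address` keys (a hypothetical schema), dedupes on a crudely normalized address, and flags listings that are no longer live with a hypothetical `Likely Sold` status.

```python
from __future__ import annotations

import json
import re
from dataclasses import dataclass


@dataclass
class LeadCard:
    """Hypothetical lead-card schema; the post doesn't list the real fields."""
    listing_url: str
    address: str
    description: str = ""
    status: str = "New Lead"


def parse_lead_card(model_output: str) -> LeadCard:
    """Validate the JSON the model produced from one alert email."""
    data = json.loads(model_output)
    if not isinstance(data, dict):
        raise ValueError("lead card must be a JSON object")
    missing = {"listing_url", "address"} - set(data)
    if missing:
        raise ValueError(f"lead card missing {sorted(missing)}")
    return LeadCard(data["listing_url"], data["address"], data.get("description", ""))


def address_key(address: str) -> str:
    """Crude dedupe key: lowercase, punctuation dropped, whitespace collapsed."""
    return " ".join(re.sub(r"[^\w\s]", " ", address.lower()).split())


def add_if_new(card: LeadCard, known: dict[str, LeadCard]) -> bool:
    """Store the card unless an equivalent address is already known."""
    key = address_key(card.address)
    if key in known:
        return False
    known[key] = card
    return True


def mark_disappeared(known: dict[str, LeadCard], live_urls: set[str]) -> list[str]:
    """Flag new leads whose listing is no longer live (probably sold)."""
    flagged = []
    for card in known.values():
        if card.status == "New Lead" and card.listing_url not in live_urls:
            card.status = "Likely Sold"  # hypothetical status name
            flagged.append(card.address)
    return flagged


known: dict[str, LeadCard] = {}
first = parse_lead_card('{"listing_url": "https://example.com/listing/1", "address": "123 Main St."}')
again = parse_lead_card('{"listing_url": "https://example.com/listing/2", "address": "123  main st"}')
print(add_if_new(first, known), add_if_new(again, known))  # True False
print(mark_disappeared(known, live_urls=set()))  # ['123 Main St.']
```

Address matching this crude will miss variants like "Street" vs "St"; it only catches the exact-duplicate case.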

This works because we know someone is buying that property to build on it, but they haven’t opened the project up to the rest of the contractors yet. So we can be there early and build a relationship.

Developers like this because they need estimates to map out their financing before they get hard bids from builders. Being there to give those estimates gets us a lot of face time on these big projects.

But we are not there yet.

3. Using Browser-Use to get the permit info

Why permits? Permits tell you if a project is actually moving. For LA, a BLDG-NEW record is a strong buying signal. It effectively means they are telling the city they’d like to get started building.

Permits also carry a lot of lead info: they often list owners/filers and contacts, and sometimes link to plans.

That said, LA’s permit system (ZIMAS) isn’t bot-friendly. Parameters don’t yield stable deep links, forms must be filled in anew, and records are… creatively duplicated (the same address can show up multiple times with different fragments of history).

So I couldn’t build a generic scrape. I needed to use AI.
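The hard part is getting to the rows. Once they are on screen, the rule for picking the right record (pool every duplicate entry for the address, keep BLDG-NEW, take the newest) is plain logic. A sketch of that rule, assuming the rows have already been read into a hypothetical `PermitRow` with a type, a date, and a link:

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PermitRow:
    """Hypothetical shape of one permit row once it has been read off the page."""
    permit_type: str
    status_date: date
    url: str


def latest_bldg_new(entries: list[list[PermitRow]]) -> PermitRow | None:
    """Pool rows from every duplicate entry for an address; return the newest BLDG-NEW."""
    rows = [
        row
        for entry in entries
        for row in entry
        if row.permit_type.strip().upper() == "BLDG-NEW"
    ]
    return max(rows, key=lambda row: row.status_date, default=None)


# Made-up rows: two duplicate entries for one address, each holding part of the history.
entries = [
    [PermitRow("BLDG-ALTER/REPAIR", date(2023, 5, 1), "https://example.com/permit/1")],
    [
        PermitRow("BLDG-NEW", date(2019, 3, 2), "https://example.com/permit/2"),
        PermitRow("Bldg-New", date(2024, 8, 9), "https://example.com/permit/3"),
    ],
]
print(latest_bldg_new(entries).url)  # https://example.com/permit/3
```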

What we did:

Created a Python environment (using uv) to run this project.

Got the Browser-Use repository set up.

In a single script, pulled the relevant leads from Airtable.

Started a for loop that took these steps for each lead:

Built a structured prompt with the relevant data from the record.

Ran the prompt using Browser-Use.

Updated the Airtable record with the info from the permit.
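The loop itself is short. A minimal sketch, with the two external calls injected as functions so it runs offline; all Airtable field names here are hypothetical. In a real script, `run_agent` would wrap Browser-Use (roughly `Agent(task=task, llm=llm)` followed by `await agent.run()`; the constructor and result accessors have shifted between releases, so check its docs), and `update_record` could wrap an Airtable client such as pyairtable’s `Table.update(record_id, fields)`.

```python
from __future__ import annotations

from typing import Callable

# Abbreviated stand-in for the full ZIMAS prompt; it only needs an {address} slot.
TASK_TEMPLATE = (
    "Go to zimas.lacity.org and find the latest BLDG-NEW permit for this address. "
    "Return the URL of the permit page and the date of the permit application.\n"
    "This is the address: {address}"
)


def run_permit_pass(
    records: list[dict],
    run_agent: Callable[[str], str],
    update_record: Callable[[str, dict], None],
) -> int:
    """Build a task per lead, run the browser agent, write its answer back.

    Records use the Airtable API shape {"id": ..., "fields": {...}}.
    "Address", "Permit Result", and "Permit Lookup Error" are hypothetical field names.
    """
    updated = 0
    for record in records:
        address = record["fields"].get("Address")
        if not address:
            continue  # nothing to search on
        task = TASK_TEMPLATE.format(address=address)
        try:
            answer = run_agent(task)
        except Exception as exc:  # one stuck page shouldn't end a 12-hour run
            update_record(record["id"], {"Permit Lookup Error": str(exc)})
            continue
        update_record(record["id"], {"Permit Result": answer})
        updated += 1
    return updated


# Offline dry run with fakes standing in for Browser-Use and Airtable.
writes = []
count = run_permit_pass(
    [{"id": "rec1", "fields": {"Address": "123 Main St"}}, {"id": "rec2", "fields": {}}],
    run_agent=lambda task: "no BLDG-NEW permit found",
    update_record=lambda record_id, fields: writes.append((record_id, fields)),
)
print(count, writes)  # 1 [('rec1', {'Permit Result': 'no BLDG-NEW permit found'})]
```

Injecting the two calls also makes a cheap dry run possible before paying for a multi-hour run.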

Here is the prompt we gave Browser-Use:

''' I'm going to give you an address, I need you to go to the Los Angeles City permit records website and find the latest BLDG-NEW permit associated with this address.

This is the address: {address}

Here are the steps you will need to take to get the most recent permit.

1. go to zimas.lacity.org

2. on the search pop-up, put the house number in the house number field, and the street name in the other field. Make sure to follow the formatting directions stated on the website.

3. click "Go"

4. Once the address is found, on the left sidebar, click the drop down "Permitting and Zoning Compliance"

5. In that dropdown, click "View"

6. On the new screen, click the plus icon to expand the "Permit Information Found:" section

7. If multiple addresses are showing, expand ALL of them.

8. Find the BLDG-NEW permit with the most recent date in the "Status" field across each address.

9. Click the link in the "Application/Permit#" section of that record

10. Return the URL of that page and the date of the permit application

'''

This worked out pretty well. Each lookup ended one of three ways: no link because no BLDG-NEW permit existed, a link whose most recent permit was still years old, or a link to a recent permit.
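Those outcomes map cleanly onto a status field. A sketch of that mapping, assuming the agent’s answer contains the permit URL and an MM/DD/YYYY date (an assumption about how it phrases its reply), with a hypothetical one-year cutoff for "recent" and an extra `needs_review` bucket for anything it can’t parse:

```python
from __future__ import annotations

import re
from datetime import date

URL_RE = re.compile(r"https?://[^\s\"'<>)]+")
DATE_RE = re.compile(r"\b(\d{1,2})/(\d{1,2})/(\d{4})\b")  # assumes MM/DD/YYYY


def classify_permit_answer(
    answer: str, today: date, recent_days: int = 365
) -> tuple[str, str | None, date | None]:
    """Map the agent's free-text answer to (outcome, url, application_date).

    Outcomes: "none" (no link), "stale", "recent", or "needs_review" when a link
    came back without a usable date. recent_days is an assumed cutoff.
    """
    url_match = URL_RE.search(answer)
    if not url_match:
        return ("none", None, None)
    url = url_match.group(0).rstrip(".,;")
    rest = URL_RE.sub(" ", answer)  # don't read a date out of the URL itself
    date_match = DATE_RE.search(rest)
    if not date_match:
        return ("needs_review", url, None)
    month, day, year = (int(g) for g in date_match.groups())
    try:
        filed = date(year, month, day)
    except ValueError:  # e.g. 13/45/2024
        return ("needs_review", url, None)
    outcome = "recent" if (today - filed).days <= recent_days else "stale"
    return (outcome, url, filed)


today = date(2025, 1, 15)
print(classify_permit_answer("No BLDG-NEW permit exists for this address.", today))
# ('none', None, None)
print(classify_permit_answer("URL: https://example.com/permit?id=1. Applied 03/02/2019", today))
# ('stale', 'https://example.com/permit?id=1', datetime.date(2019, 3, 2))
```

Storing the agent’s raw answer and classifying it afterwards means the cutoff can change without rerunning the browser.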

That link went into Airtable, where we could grab the rest of the info when we needed it.

And that’s pretty much it. This was the largest bottleneck in the sales process. Once we automated it, we had eliminated the work that took up 80% of our time. What was left was incredibly easy.

Notes from the build:

Smaller models struggled with the unpredictable UI states (cookie banners, shifted menus). A larger reasoning model handled it reliably.

It’s slower than a human on a single record, but it runs 10–12 hours straight. One 12-hour run cost about $200 in tokens and was absolutely worth it given deal values.

After tuning, we went from 1–3 new leads/day to spikes of 24 qualified leads/day (we paused the system once they had 10 serious bids in motion).

Thoughts

It was pretty cool to build an AI automation that took over the computer and did lead research.

I don’t know if I would do it for most use cases.

It worked here because we could clearly define which step of the process was costing the most time, and then eliminate it.

In other words, we found the bottleneck and it was solvable.

I’d do it again if the situation called for it.