Essentialism is Key to Successful AI Implementation.

Fix the Gap Between Your AI Expectations and Your AI Implementation

You don’t want an everything agent.

Most of your results can come from a few simple automations.

If I’m exaggerating, it’s not by much. Understanding this basic 80/20 rule of AI is what will keep you from drowning as you bring this still brand-new tech into your work.

The practical framework below comes from building lots of very real automations and systems using AI.

So let’s go over how to build simple AI automations that get results and actually reduce the amount of time you work instead of increase it.

1. Scope Clarity

The fastest way to build a great automation with AI is to shrink the problem until it’s easily solvable.

It’s cheating. But it always works.

The everything agent that knows every part of your business sounds magical.

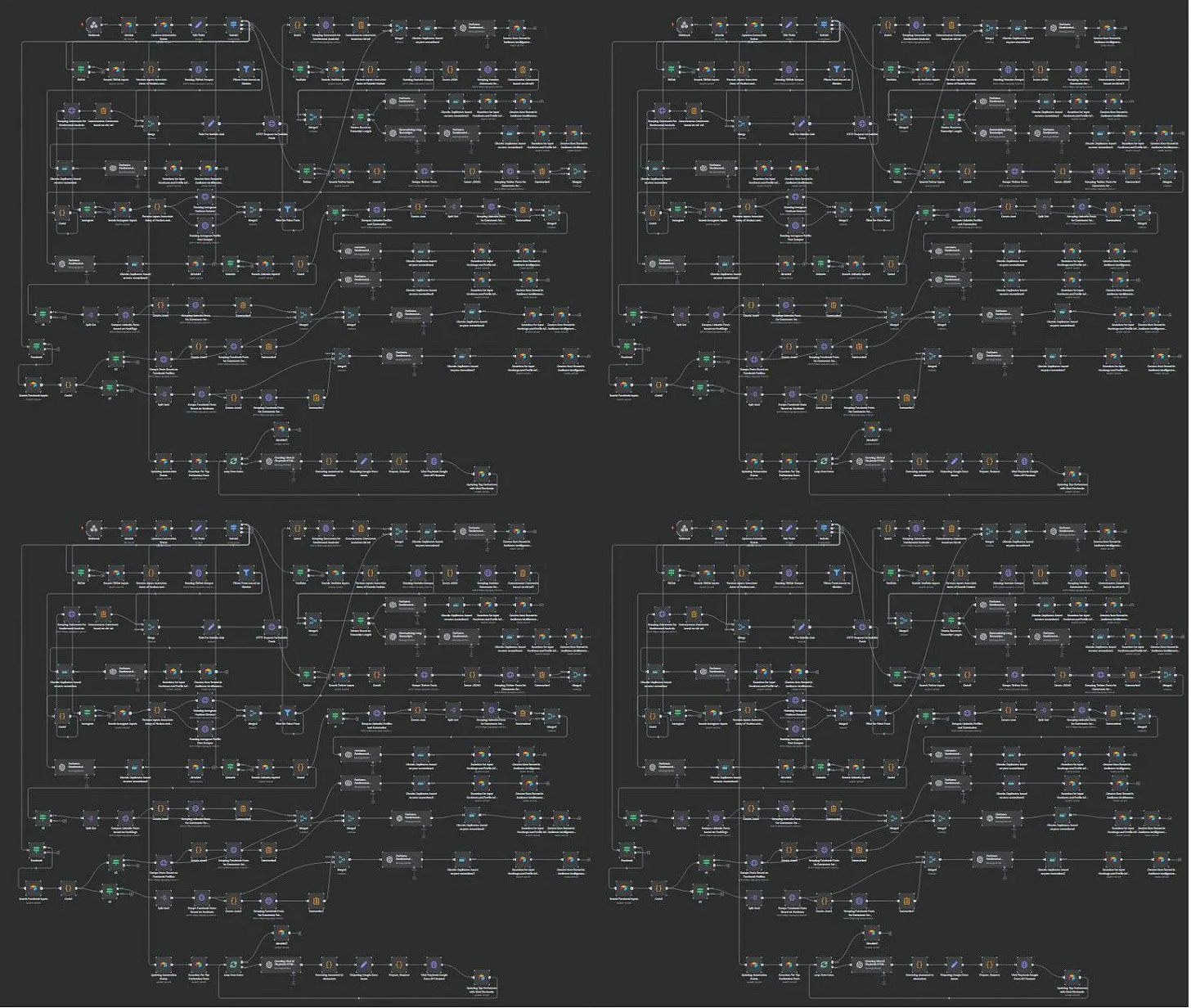

I found this automation on Reddit. I bet the person who built it was thinking, “I want to build an automation that runs my whole business.”

The reality is that maintaining this automation is now this person’s full-time job. They may or may not ever get to running their actual business.

Instead, focus on making the scope narrow and testable.

Define the single outcome.

What is the “done” deliverable?

Bad Example: “Read and reply to all my emails using company knowledge”

Good Example: “Read emails from active clients. If a response is needed, look through the client’s project documentation to find the answer or add a task.”

Describe success in plain language.

Write a quick test to measure the results against.

Maybe take a manually created deliverable from the past (an actual example of how you responded to a client question about project status) and use that as a rubric to measure the AI against.

Example: “Given an email from an active client, we pull live project facts and produce a reply that cites timeline, blockers, and next step.”
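Here is a minimal sketch of what that quick test could look like in Python. It only checks that a reply mentions the three things from the example above (timeline, blockers, next step). The keyword lists and the sample replies are assumptions for illustration, and a keyword match is a rough stand-in for a real review.

```python
from typing import List

# Hypothetical rubric: keywords that suggest each required topic is covered.
REQUIRED_TOPICS = {
    "timeline": ["timeline", "deadline", "due"],
    "blockers": ["blocker", "blocked", "waiting on"],
    "next step": ["next step", "next up"],
}


def missing_topics(reply: str) -> List[str]:
    """Return the rubric topics the reply never mentions."""
    text = reply.lower()
    return [
        topic
        for topic, keywords in REQUIRED_TOPICS.items()
        if not any(keyword in text for keyword in keywords)
    ]


# Illustrative replies, not real client data.
good_reply = (
    "The timeline is unchanged and launch is still due March 3. "
    "One blocker: we are waiting on your logo files. "
    "Next step: we send the staging link on Friday."
)
bad_reply = "Everything is going great, talk soon!"

print(missing_topics(good_reply))
print(missing_topics(bad_reply))
```

An empty list means the reply covers the rubric. Anything else tells you exactly what the draft left out.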

Identify the inputs (Context Required)

Where is the truth? (CRM, Airtable, Gmail labels, Notion, Asana, ClickUp, etc.)

Manage your clients & projects in Notion? Then that’s where you will task the Agent with looking.

Let’s be honest… Are you actually putting all the info about your client and project there? Or is only some of it there? The quality of the AI output will fall to the quality of the inputs.

List the process steps

Filter incoming email based on active clients → Summarize the client request → Decide where to look for answers, based on workspace documentation (client docs, client projects, client comms, etc.) → Write an email draft answering the client’s question.
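The four steps above can be sketched as one small function. This is a rough Python outline, not a real integration: `ACTIVE_CLIENTS`, the `Email` class, and the three `fake_` steps are hypothetical stand-ins for your CRM, your inbox, and whatever model or search you actually use.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical stand-in for the list of active clients in your CRM.
ACTIVE_CLIENTS = {"dana@example.com"}


@dataclass
class Email:
    sender: str
    body: str


def handle_email(
    email: Email,
    summarize: Callable[[str], str],
    find_docs: Callable[[str, str], List[str]],
    write_draft: Callable[[str, List[str]], str],
) -> Optional[str]:
    """Run the four steps in order; return a draft, or None if filtered out."""
    # Step 1: filter on active clients (plain code, no AI needed).
    if email.sender.lower() not in ACTIVE_CLIENTS:
        return None
    # Step 2: summarize the client request.
    request = summarize(email.body)
    # Step 3: look up the relevant workspace documentation.
    docs = find_docs(email.sender, request)
    # Step 4: write a draft reply (a draft, not a sent email).
    return write_draft(request, docs)


# Stub steps so the sketch runs without any model or API.
def fake_summarize(body: str) -> str:
    return body.strip().split("\n")[0]


def fake_find_docs(sender: str, request: str) -> List[str]:
    return ["Project status: on track"]


def fake_write_draft(request: str, docs: List[str]) -> str:
    return f"Re: {request}\n\n" + "\n".join(docs)


steps = (fake_summarize, fake_find_docs, fake_write_draft)
draft = handle_email(Email("dana@example.com", "Where are we on the launch?"), *steps)
ignored = handle_email(Email("stranger@example.com", "Buy now"), *steps)
print(draft)
print(ignored)
```

Because each step is passed in separately, you can test or swap one step without touching the others.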

Example reframes

❌ “Read my emails and handle whatever is needed.”

✅ “When an email from an active client arrives, fetch their current project row and draft a reply using live dates and status, then save as Gmail draft.”

❌ “When New Lead Comes In, Research The Company”

✅ “When a new lead comes in, find the number of employees, when they raised their last financing round, at what valuation, and whether they are regularly posting social media content, running ads, and writing blog posts. Based on that info, choose which of my three qualified ICP categories they fall into, if any.”
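One way to make the good lead-research example concrete is to write down the exact fields you expect back. Below is a sketch in Python. The field names, the category labels (`ICP-A`, `ICP-B`, `ICP-C`), and the thresholds are all made up for illustration; your own three categories will have their own rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LeadResearch:
    """The specific facts the research step must return (names are assumptions)."""
    employees: Optional[int]
    last_round_date: Optional[str]  # e.g. an ISO date, or None if unknown
    last_round_valuation_usd: Optional[int]
    posts_social: bool
    runs_ads: bool
    writes_blog: bool


def pick_icp(lead: LeadResearch) -> Optional[str]:
    """Hypothetical rules standing in for three real ICP categories."""
    if lead.employees is None:
        return None  # not enough information to qualify
    channels = sum([lead.posts_social, lead.runs_ads, lead.writes_blog])
    if lead.employees >= 200 and channels >= 2:
        return "ICP-A"
    if lead.employees >= 20 and channels >= 1:
        return "ICP-B"
    if lead.employees < 20 and lead.last_round_date is not None:
        return "ICP-C"
    return None


big_marketer = LeadResearch(250, None, None, True, True, False)
unknown_size = LeadResearch(None, None, None, True, True, True)
print(pick_icp(big_marketer))
print(pick_icp(unknown_size))
```

A vague instruction like “research the company” cannot be checked. A list of named fields can.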

At the end of the day, building automations IS engineering. And engineering doesn’t work in generalities.

You don’t build theoretical bridges. You build real ones.

2. Delete

Once you are clear on the scope of each automation you want to build, ask yourself whether this automation, and each of its steps, should really be built.

And do this at every level.

Whether or not to automate a thing is a business strategy question.

For example, before you build an automation that responds to client emails, you may want to ask yourself, “Do I really want to provide my clients 24/7 email support?”

Are you sure it’s not better to just give your clients live access to their project board?

If you still want to build it, ask yourself the same thing about each step of your proposed automation.

Do you really need to reference past client comms?

Also watch out for edge cases.

“90% of clients buy package A, but 10% have package B. And package B will have different steps to the automation.”

These kinds of forks are dangerous. They are innocent enough at the beginning, but as things grow they create a lot of problems.

There are 2 ways to handle this:

Generalize the automation enough to handle both cases in the same workflow.

Delete package B and be grateful your business is simpler now.

Apply “Minimum Necessary Context.”

More context ≠ better answers.

Large, messy inputs create contradictions and drift. Only give your prompts the context required to accomplish the task.

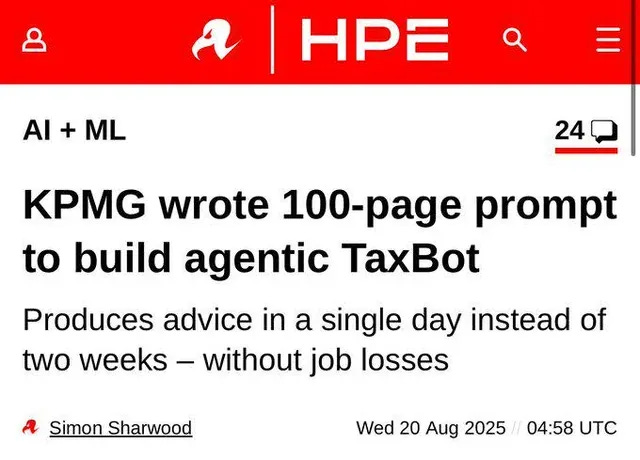

Don’t be KPMG:

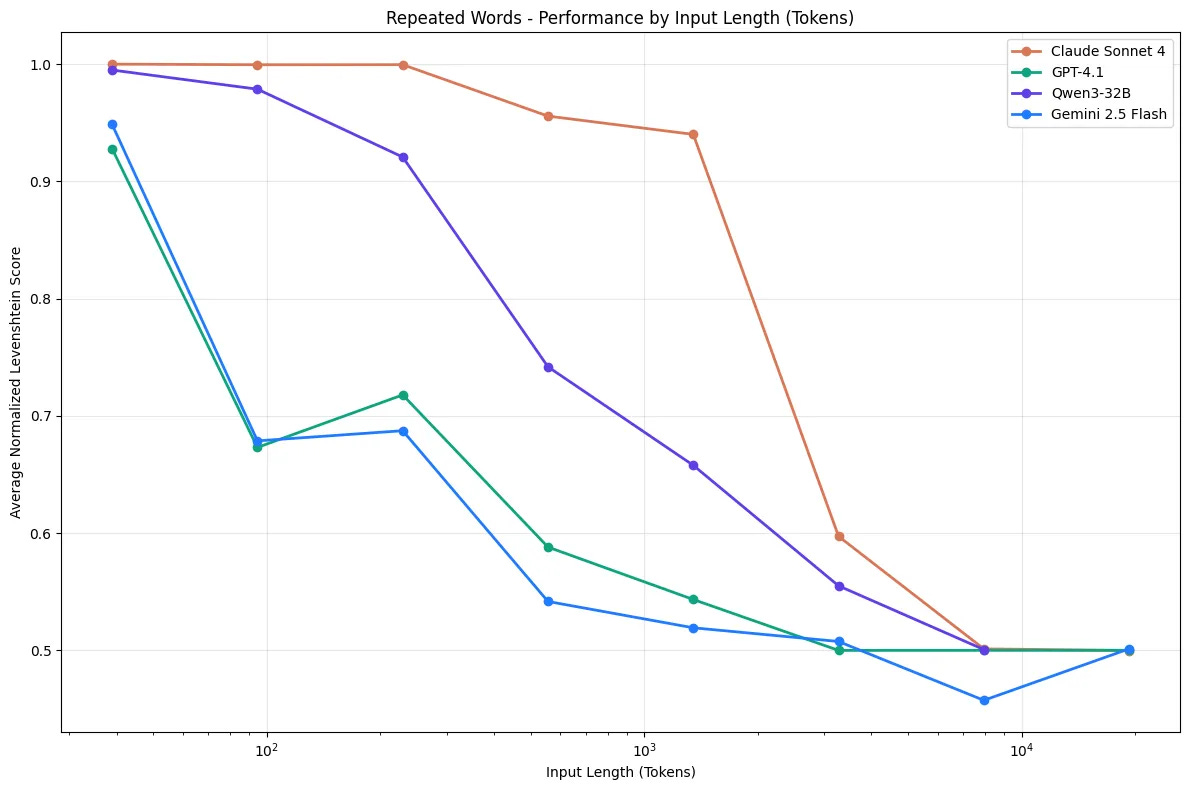

Why? Because AI performance tends to decline as you give it more context.

It’s called context rot.

So really ask yourself if all the context you intend to give your AI automation is really necessary, or if you can limit it in some way to only what matters.
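As a small illustration of “Minimum Necessary Context,” here is a Python sketch that passes only the fields a status reply needs instead of the whole record. The record and its field names are invented for the example.

```python
from typing import Dict, List


def build_context(record: Dict[str, str], needed_fields: List[str]) -> str:
    """Keep only the fields the task needs, one per line, for the prompt."""
    return "\n".join(
        f"{field}: {record[field]}" for field in needed_fields if field in record
    )


# Hypothetical project row with more information than the task requires.
project_row = {
    "status": "On track",
    "due_date": "March 3",
    "blockers": "Waiting on logo files",
    "next_step": "Send staging link",
    "internal_notes": "Long history of pricing discussions",
    "old_meeting_transcripts": "Many pages of text",
}

context = build_context(project_row, ["status", "due_date", "blockers", "next_step"])
print(context)
```

The prompt only ever sees four short lines. The notes and transcripts stay in the database where they belong.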

3. Automate

Once you’ve broken things down to their smallest-useful-workflow, it’s then time to automate it.

Note: You won’t have simplified things completely, even though you tried, and that’s ok. It is often easier to simplify while revising the automation than while designing it. Chin up.

Another Note: I use N8N for my automations and I’m very happy with it. I’ve tried most of the other options, and my advice is to just pick the one you like working with best.

Here are some rules I follow while building automations:

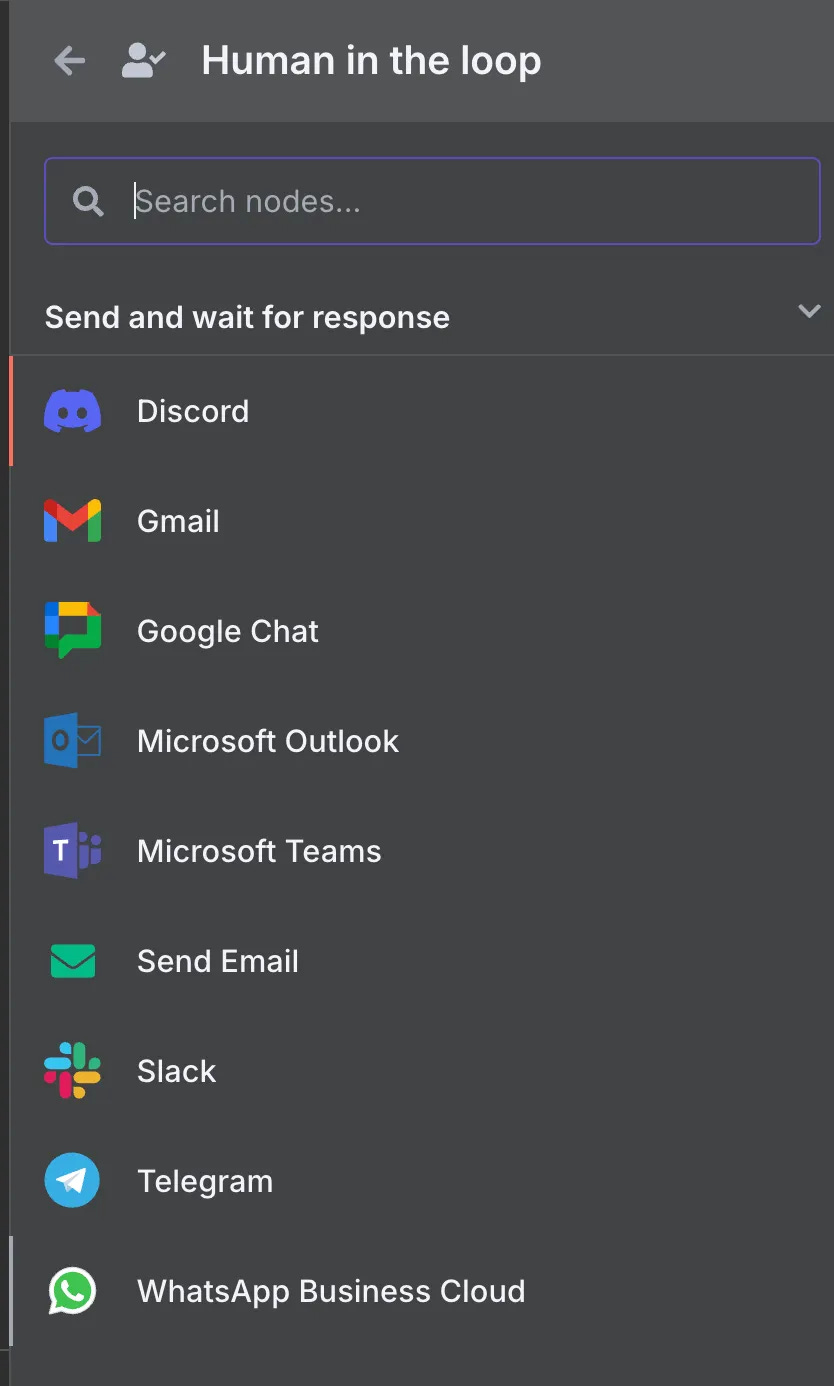

Human in the loop by default.

Drafts saved, posts queued, statuses set to “Needs Review.”

Don’t let AI publish or email externally without your approval. Especially early on.

After you’ve seen tens or hundreds of cases and worked out all the errors, you can consider letting the AI send on its own.

Or identify which cases the AI can send live and which it should send to you for review.

You can keep a human in the loop by either:

Adding completion steps that stop one step before pushing live: write an email draft instead of sending the email, or put the output in Notion with a “Needs Review” status instead of publishing.

Using the built-in human-in-the-loop steps in N8N (or similar).
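The “one step before live” idea can be sketched in a few lines of Python. Nothing here is an N8N feature. `StagedOutput`, `approve`, and `send` are hypothetical names, and `deliver` stands in for whatever actually sends the email or publishes the post.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StagedOutput:
    body: str
    status: str = "Needs Review"  # every AI output starts here


def approve(output: StagedOutput) -> StagedOutput:
    """The human step: flip the status after reading the draft."""
    output.status = "Approved"
    return output


def send(output: StagedOutput, deliver: Callable[[str], None]) -> None:
    """Refuse to deliver anything a human has not approved."""
    if output.status != "Approved":
        raise PermissionError("Output has not been reviewed yet")
    deliver(output.body)


sent: List[str] = []
draft = StagedOutput("Hi Dana, the launch is on track.")

try:
    send(draft, sent.append)  # blocked: still "Needs Review"
except PermissionError:
    blocked = True
else:
    blocked = False

send(approve(draft), sent.append)  # goes out only after approval
print(blocked, sent)
```

The point of the design is that the safe path is the default. Sending requires an extra, deliberate step.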

Don’t Use AI Where You Don’t Need To

If you don’t actually need AI for a step, don’t use it.

For example, don’t have an AI agent search for client docs if you already know the client and can just run the search directly in Notion beforehand.

It only invites room for error.

Code is deterministic. If conditions are met, it produces the asked-for result every time.

AI is probabilistic. If conditions are met, it will still fail sometimes.
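Here is a tiny Python sketch of that rule. `CLIENT_DOCS` is a hypothetical stand-in for a direct Notion search, and `ask_agent` is a placeholder for an AI search step. The agent only gets called when the plain lookup has nothing.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical stand-in for a direct, deterministic database search.
CLIENT_DOCS: Dict[str, List[str]] = {
    "acme": ["Acme timeline", "Acme kickoff notes"],
}

agent_calls: List[str] = []


def ask_agent(question: str) -> List[str]:
    """Placeholder for an AI search step; records that it was used."""
    agent_calls.append(question)
    return []


def find_docs(
    client_id: Optional[str],
    question: str,
    fallback: Callable[[str], List[str]],
) -> List[str]:
    # Known client: plain lookup, same answer every time.
    if client_id in CLIENT_DOCS:
        return CLIENT_DOCS[client_id]
    # Unknown client: only now is the probabilistic step worth the risk.
    return fallback(question)


known = find_docs("acme", "Where is the timeline?", ask_agent)
unknown = find_docs(None, "Who is this?", ask_agent)
print(known, unknown, agent_calls)
```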

Test your prompts on the front end

Once you publish your automation, you’re not going to want to continuously edit it. You just want it to work.

So make sure you really test your prompt.

Check how it handles the different inputs.

Make the adjustments on the front end.

Measure its performance against the deliverable standard you set at the beginning of this process.
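A simple way to do this is to run the same prompt over a handful of different inputs and list the ones that fail your standard. The Python sketch below assumes a `generate` function that wraps your prompt. Here it is faked so the example runs without a model, and the pass check is a deliberately simple assumption.

```python
from typing import Callable, List


def failing_inputs(
    generate: Callable[[str], str],
    inputs: List[str],
    passes: Callable[[str], bool],
) -> List[str]:
    """Return every input whose output does not meet the standard."""
    return [text for text in inputs if not passes(generate(text))]


def fake_generate(email_body: str) -> str:
    """Stand-in for the real prompt call; returns nothing for empty input."""
    if not email_body.strip():
        return ""
    return f"Thanks for asking about: {email_body} Next step: we will follow up Friday."


def cites_next_step(reply: str) -> bool:
    return "next step" in reply.lower()


test_inputs = [
    "Where are we on the launch?",
    "Can we move the deadline?",
    "",
    "Invoice question",
]

failures = failing_inputs(fake_generate, test_inputs, cites_next_step)
pass_rate = 1 - len(failures) / len(test_inputs)
print(failures, pass_rate)
```

The list of failures is more useful than the pass rate. It tells you which kind of input to fix the prompt for before you publish.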

Error notifications on

This is a simple hack.

Make sure you are looking at the error notifications from your automation tool, and debug your automation when they fire.

Set up error messages in Slack or wherever you will see them.
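If your tool doesn’t have this built in, a Slack incoming webhook is one option: it accepts a JSON body with a `text` field. Here is a minimal Python sketch using only the standard library. The message wording, the function names, and the workflow and step names are assumptions, and the webhook URL is something you create in your own Slack workspace.

```python
import json
import urllib.request
from typing import Dict


def build_error_payload(workflow: str, step: str, error: str) -> Dict[str, str]:
    """Build the JSON body for a Slack incoming webhook message."""
    return {"text": f"Workflow '{workflow}' failed at step '{step}': {error}"}


def notify_slack(webhook_url: str, payload: Dict[str, str]) -> int:
    """POST the payload to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


# Hypothetical workflow and step names.
payload = build_error_payload("lead-research", "fetch company", "timeout")
print(payload["text"])
```

To actually send it, call `notify_slack(your_webhook_url, payload)` from wherever your automation catches errors.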

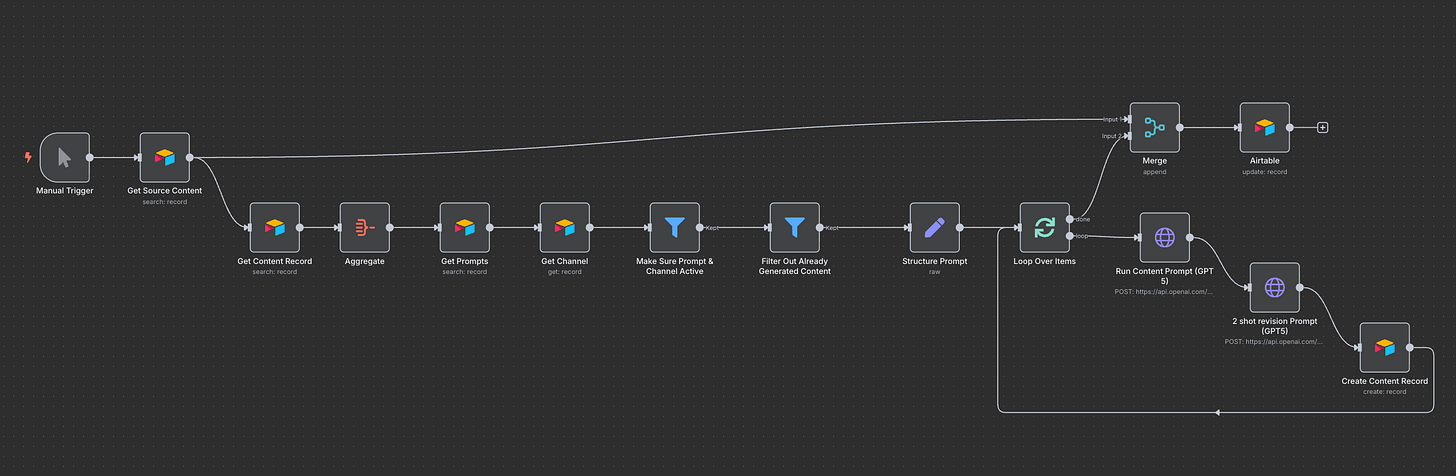

For some inspo: this automation creates all my content for me.

It’s not the most beautiful automation out there. But it’s mine and I love it.

Over the weekend it wrote over 600 social media posts for me.

4. Connect

Don’t build big workflows.

Build small ones and connect them.

Building small automations is easier. It also means your automations are more durable. And that makes your system as a whole more durable.

When each automation is stable (low errors, high success), here is how to link them together in a coherent system.

Trigger chaining (sequential)

Essentially, workflow A finishes → it triggers Workflow B.

An example might be an automation that puts new leads in your CRM, notifies you in Slack, and then triggers another automation that gathers research on the lead.

Most automation platforms like N8N or Zapier will have the option to trigger another workflow and pass data into it.

The separation of these automations gives you more use cases.

Maybe most of your leads come from Typeform. So the first automation adds them to the CRM.

But what if you meet a new lead at a conference?

Because the research automation is separated, you can create a different form for a different scenario that will still trigger your lead research automation.

(like texting an agent the lead info or something.)
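In code terms, trigger chaining looks something like the Python sketch below. Each function stands in for a separate workflow, and the lists stand in for your CRM and research output. All names and sample leads are hypothetical. In a real setup the call from A to B would be your platform’s “trigger another workflow” step.

```python
from typing import Dict, List

crm: List[Dict[str, str]] = []  # stand-in for your CRM
researched: List[str] = []      # companies the research workflow has handled


def research_lead(lead: Dict[str, str]) -> Dict[str, str]:
    """Workflow B: gathers research on a lead, whoever triggered it."""
    researched.append(lead["company"])
    return {**lead, "research": f"Notes on {lead['company']}"}


def on_form_submission(lead: Dict[str, str]) -> Dict[str, str]:
    """Workflow A: form lead goes into the CRM, then triggers workflow B."""
    crm.append(lead)
    return research_lead(lead)


def on_conference_note(name: str, company: str) -> Dict[str, str]:
    """A second entry point that reuses workflow B unchanged."""
    return research_lead({"name": name, "company": company})


from_form = on_form_submission({"name": "Dana", "company": "Acme"})
from_conference = on_conference_note("Sam", "Globex")
print(researched)
```

The research workflow never needs to know where the lead came from, which is what makes it reusable.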

Manipulating a common database

Automations that change information in the same database are inherently connected.

They are generally triggered when data hits a certain state.

For example, my content automation runs when I have new source content with the status “In Que.”

And they generally end by changing data in the database, like changing a record status to “complete.”

You can then build another automation that looks for records with that status and does whatever you need with them.

This is the simplest way in which your automations will be connected, especially if they are all in the same area of your business. For example, all your sales automations will probably change data in your CRM and be connected that way.
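Here is a rough Python sketch of two automations connected only through record status. The `records` list stands in for a database table, the titles are invented, and the status values “In Que” and “complete” are the ones from the example above.

```python
from typing import Dict, List

# Stand-in for a shared database table.
records: List[Dict] = [
    {"id": 1, "title": "Podcast episode", "status": "In Que"},
    {"id": 2, "title": "Old webinar", "status": "complete"},
    {"id": 3, "title": "New blog post", "status": "Draft"},
]


def run_content_workflow(table: List[Dict]) -> List[int]:
    """First automation: picks up queued records and marks them complete."""
    processed = []
    for record in table:
        if record["status"] == "In Que":
            record["posts"] = [f"Post about {record['title']}"]
            record["status"] = "complete"
            processed.append(record["id"])
    return processed


def run_followup_workflow(table: List[Dict]) -> List[int]:
    """Second automation: only looks at status, never at the first workflow."""
    return [record["id"] for record in table if record["status"] == "complete"]


processed = run_content_workflow(records)
ready = run_followup_workflow(records)
print(processed, ready)
```

The two functions never call each other. The status field is the only connection, so either one can be changed or replaced on its own.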

Why this is a better system design than a big automation

This makes your automations way easier to debug. Failures are way easier to identify.

If your business changes and you have to pivot your process, you won’t lose your whole automation.

It’s also way less intimidating. You can make improvements one workflow at a time.

Conclusion

When I follow these principles, I end up building useful automations:

Scope Clarity gives me outcomes I can test in minutes.

Delete removes as many chances of failure and chaos as possible before I start. It also means I just don’t build things I don’t need.

Automate with a human-in-the-loop keeps me safe while I learn edge cases.

Connect turns tiny reliable pieces into a system that scales with me.

Hope this was useful for you. Feel free to reach out and let me know your thoughts!